Deepfakes and democracy: Can we trust what we see online?

TEHRAN - In the past, the saying “seeing is believing” carried weight. A photograph or a video was considered proof of reality. But in today’s digital age, even our eyes can deceive us.

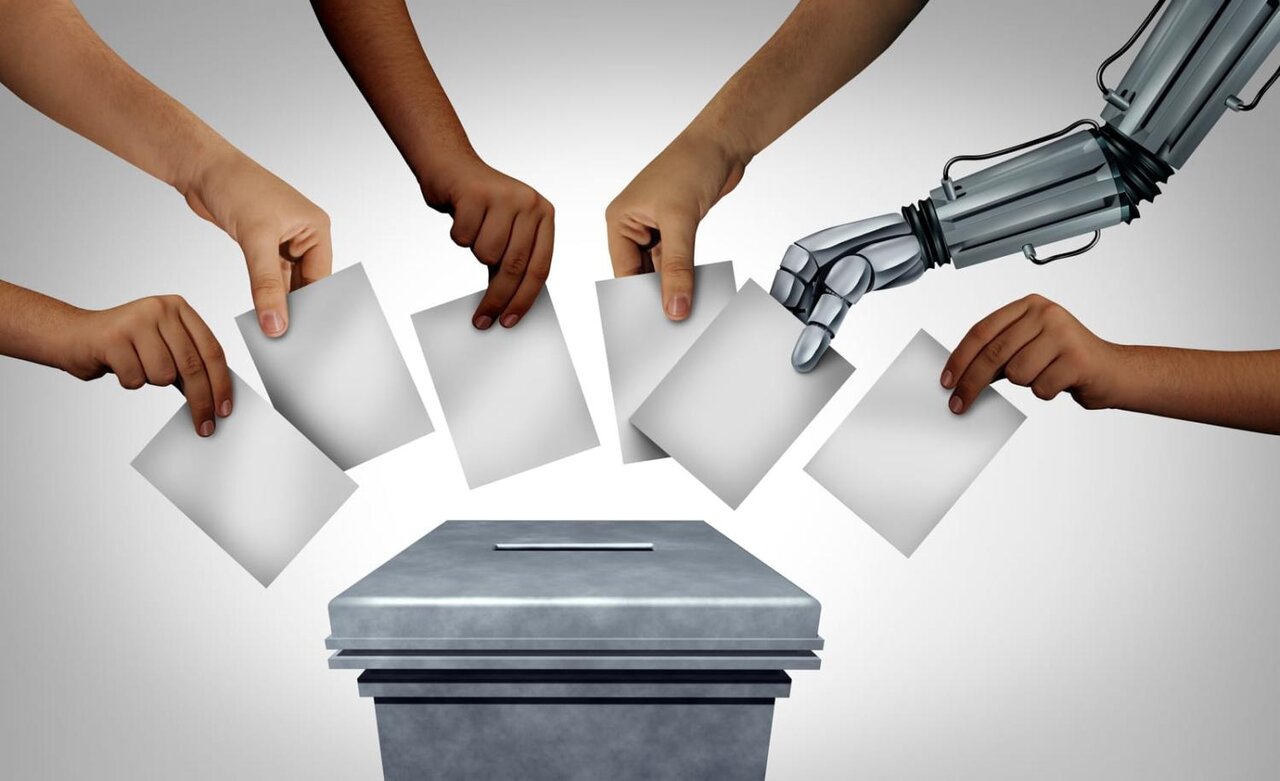

Thanks to the rapid development of artificial intelligence, particularly deepfake technology, images, videos, and even voices can now be fabricated so convincingly that distinguishing fact from fiction becomes nearly impossible. This technological shift forces us to question not only what we see online but also what it means for trust, democracy, and social stability.

Deepfakes are created using advanced AI models that can learn to replicate human faces, voices, and movements with astonishing precision. At first, this technology seemed like an entertaining novelty. Social media platforms became flooded with humorous clips of celebrities singing songs they never performed or actors appearing in roles they never played. But beneath the humor lies a far darker reality. When deepfakes are weaponized, they can spread misinformation, damage reputations, and even influence political outcomes.

The danger of deepfakes is most evident in the political arena. Imagine a video of a world leader announcing military action or conceding defeat in an election, shared widely before fact-checkers can respond. In a polarized environment where misinformation already spreads faster than truth, such fabrications could spark panic, unrest, or even conflict. The 2024 elections in several countries were already marked by growing concerns about manipulated media, and the stakes will only rise as the technology becomes cheaper and more accessible.

The problem extends beyond politics. Individuals are also at risk, as deepfakes can be used for harassment, blackmail, or identity theft. A convincing fake video can ruin a person’s reputation in minutes, long before they have a chance to defend themselves. For journalists and news outlets, the challenge is even greater. If the authenticity of every photo or video can be questioned, how do we maintain trust in media as a source of truth? This erosion of trust is perhaps the most insidious consequence of deepfakes. It creates an atmosphere where people can dismiss inconvenient truths as “fake” while malicious lies gain traction.

Yet, as with most technologies, deepfakes are not inherently evil. The same tools that can mislead can also be used for creativity and progress. In film production, deepfake technology reduces costs by allowing realistic effects without expensive reshoots. In education, historical figures can be brought “back to life” to engage students. In medicine, AI-driven facial reconstructions can assist patients recovering from trauma. These examples remind us that technology itself is neutral; it is how we choose to use it that defines its impact.

Addressing the ethical and societal risks of deepfakes requires a multi-layered approach. Technology companies are already developing detection tools to identify manipulated content. However, the race between deepfake creators and detectors is constant, and the fakes often spread faster than the truth. Legal frameworks are also beginning to evolve, but laws struggle to keep pace with the speed of innovation. Most importantly, the public needs stronger digital literacy. Citizens must learn to question what they see online, verify information from multiple sources, and resist the urge to share sensational content without scrutiny.

At its core, the deepfake debate is not just about technology but about trust. In democratic societies, trust is the glue that holds institutions together: trust in elections, in journalism, in leaders, and in each other.

If that trust collapses under the weight of fabricated realities, the consequences could be severe. At the same time, dismissing deepfakes as purely destructive ignores the opportunities they provide for art, education, and innovation.

We now stand at a crossroads. Either deepfakes become yet another weapon in the arsenal of disinformation, or society learns to adapt, creating safeguards and cultivating resilience. The future will depend not only on new technologies to detect the fakes but also on our collective ability to remain critical, thoughtful, and responsible consumers of information. In an era when seeing is no longer believing, perhaps the real challenge is to build new forms of trust that go deeper than images and videos.
